Do you want to optimize the SEO of your site or analyze your competitors?

Let's discover Screaming Frog SEO Spider, a powerful SEO tool that will allow you to explore the SEO of the sites you will crawl.

What is Screaming Frog?

Screaming Frog is a crawler: an SEO tool that crawls the URLs of your site, or of the sites you want to analyze, and flags different types of issues such as duplicate H1s, 404 errors, titles that are too short, and so on.

The goal of using this tool is to end up with an SEO-friendly site that has as few errors as possible. This technical optimization step is vital for increasing your visibility in search results.

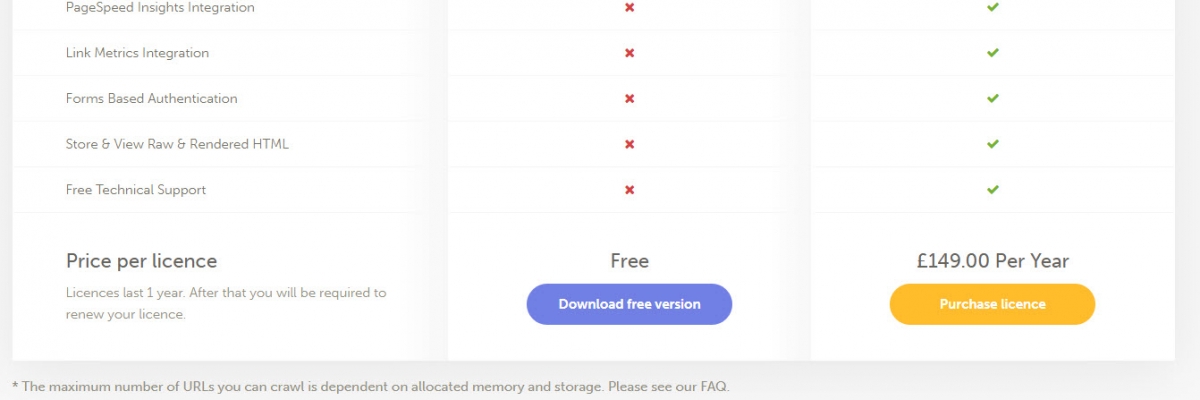

Screaming Frog pricing

The software is free for basic usage. If you want to use the more advanced features, the premium version costs £149.00 per year.

A more budget option for this software is our Screaming Frog group buy service. You can use premium version at only $20/year.

Download Screaming Frog

Before exploring the tool's features, you will have to download and install it on your computer. You can download it here.

Configuring Screaming Frog

A suitable configuration of your tool will be essential to analyze a site in-depth and obtain all the data you need. Find out step by step how to configure it to get the most out of it.

Start your first Screaming frog crawl:

When you open the tool, the first thing you will see is a bar in which you can enter the URL of the site you want to analyze:

Just enter your URL and select Start to launch your first crawl. Once it has started, if you want to stop it, click Pause, then Clear to reset the crawl.

Configure your Screaming Frog SEO Spider:

There are many possibilities to configure your crawler so that it only returns the information that interests you. This can be very useful when you crawl sites with a lot of pages or just want to analyze a subdomain.

To do this, go to the top menu and select Configuration.

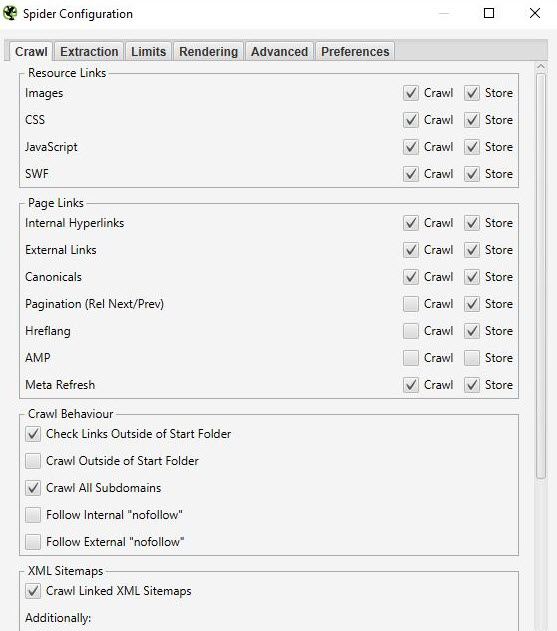

1 / Spider

CRAWL

Spider is the first option that is available when you enter the setup menu. This is where you can decide which resources you want to crawl.

You should normally have a window that looks like this:

- Resources links:

For example, you can decide not to crawl the site's images. Screaming Frog will then exclude all img elements (img src="image.jpg") from its crawl. Your crawl will be faster, but you will not be able to analyze image weight, alt attributes, and so on.

It is up to you to choose from this what you need to analyze.

Good to know: some images may still show up in the results if they are linked through an a href rather than an img src.

In the screenshot above, what is highlighted in yellow will not be crawled. The image link marked with the red cross, on the other hand, will still be crawled.

On the majority of your crawls, you will have a fairly basic configuration in this window. We recommend checking the crawl and storage of images, CSS, javascript and SWF files for in-depth analysis.

- Links page:

In this part, you can also decide what you want to crawl in terms of links. For example, you can say that you don't want to crawl canonicals or external links. Again, here it's up to you what you want to analyze.

Example:

Crawl with external links and without external links:

Crawl with external links

Without external links

- Crawl Behavior

In this part, you can give instructions to the tool to crawl specific pages of your site.

Example:

Your site has pages on subdomains and you still want to crawl them for analysis. If you check Crawl All Subdomains and your site links to these pages, Screaming Frog will include them in its crawl results.

Below you can see a site that has a subdomain of the form blog.domainname. Because we selected Crawl All Subdomains before launching our crawl, the application also returns all the subdomain URLs.

- XML Sitemaps

Do you have a Sitemap on your site? It could be interesting to analyze the differences between what you have in your Sitemap and the URLs that Screaming Frog finds.

This is where you can tell Screaming Frog where your Sitemap is so it can analyze it

To do this, you just have to:

1- Check Crawl Linked XML Sitemaps

2- Check Crawl these sitemaps

3- Enter the URL of your sitemap

EXTRACTION

In this part, you can choose which data you want Screaming Frog to extract from your site and display in its interface.

Example:

If you uncheck Word Count, you will no longer have the word count of each of your crawled pages

Normally you should not need to change much here, because the data selected by default covers the main information you will need.

If you want to do an in-depth SEO audit, it can be worth selecting the options related to structured data: JSON-LD, Microdata, RDFa, Schema.org Validation.

You will then be able to surface valuable information about the implementation of structured data on your site, such as the pages that have none, the associated errors, and the types of structured data per page.

LIMITS

In this third tab, it is possible to define limits for the crawler. This can be particularly useful when you want to analyze sites with a lot of pages.

- Total crawl limit:

This corresponds to the maximum number of URLs that Screaming Frog can crawl. When we analyze a site, it does not necessarily make sense to limit the crawl to a fixed number of URLs, which is why we generally do not touch this parameter.

- Limit Crawl Depth:

This is the maximum depth you want the crawler to be able to access. Level 1 being the pages located one click away from the homepage and so on. We hardly ever change this parameter because it is interesting to look precisely in an SEO audit at the site's maximum depth. If it is too high, associated actions will have to be carried out to reduce it.

- Limit Max Folder Depth:

In this case, this is the maximum folder depth you want the crawler to be able to access

- Limit number query string:

Some sites have parameters of the form ?x=… in their URLs. This option lets you limit the crawl to URLs containing no more than a certain number of parameters.

Example: the URL https://www.popcarte.com/cartes-flash/carte-invitation/invitation-anniversaire-journal.html?age=50&format=4 contains two parameters, age= and format=. It could be worth limiting the crawl to a single parameter to avoid crawling tens of thousands of URLs.

This option is also very useful for e-commerce sites with many pages and listings with cross-links.

- Max redirect to Follow:

This option allows you to define the number of redirects that we want our crawler to be able to follow

- Max URL Length to Crawl:

By modifying this field, you can select the length of the URLs you want to crawl. On our side, we hardly ever use it except in very specific cases.

- Max links per URL to Crawl:

Here you can control the number of links per URL that the Screaming Frog will be able to crawl

- Max page Size to Crawl:

Here you can choose the maximum weight of the pages that your crawler will be able to analyze
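To make the query string limit described above more concrete, here is a minimal Python sketch of the idea: count the parameters in a URL and compare the count to a limit. The helper names `count_query_params` and `within_query_limit` are hypothetical and only illustrate the principle. This is not how Screaming Frog implements the option internally.

```python
from urllib.parse import urlsplit, parse_qsl


def count_query_params(url: str) -> int:
    """Return the number of query-string parameters in a URL."""
    query = urlsplit(url).query
    # keep_blank_values=True so that "?age=" still counts as one parameter
    return len(parse_qsl(query, keep_blank_values=True))


def within_query_limit(url: str, max_params: int) -> bool:
    """True if the URL has at most max_params query-string parameters."""
    return count_query_params(url) <= max_params


url = (
    "https://www.popcarte.com/cartes-flash/carte-invitation/"
    "invitation-anniversaire-journal.html?age=50&format=4"
)
print(count_query_params(url))     # 2
print(within_query_limit(url, 1))  # False: this URL would be skipped with a limit of 1
```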

RENDERING

This window will be particularly useful if you want, for example, to crawl a site running a javascript framework like Angular, React, etc.

If you analyze a site of this type you can configure your crawler in this way:

Screaming Frog will take screenshots of the crawled pages, which you can then find in your crawl results by clicking on a URL in the list and selecting Rendered Page.

If you see that your page rendering is not optimal, look at the blocked resources in the left frame, and try changing the AJAX Timeout.

ADVANCED

If you have made it this far, you already have a nice configuration for your crawler. However, if you wish to go even further, you can still manage certain parameters in this "Advanced" part.

Here are some configurations that might be useful:

- Pause on High memory usage :

This option is checked by default in the Screaming Frog configuration panel. It is particularly useful when you crawl large sites: when Screaming Frog reaches its memory limit, it pauses the crawl and warns you so that you can save your project and resume it later if you wish.

- Always follow redirect :

This option is useful in case you have redirect chains on the site you are analyzing.

- Respect noindex, canonical and next-prev :

In this case, the crawler will not show you in the results of your Crawl the pages that contain these tags. This can again be interesting if you are analyzing a site with a lot of pages.

- Extract images from img srcset attribute :

The crawler in this case will extract images with this attribute. This attribute is mainly used in cases of responsive management of a site. It's up to you to see if it is relevant to recover these images

- Response Timeout (secs):

This is the maximum time that the crawler will have to wait for a page to load on the site. If this time is exceeded Screaming Frog may return a code 0 corresponding to a "connection timeout."

- 5xx response retries:

This corresponds to the number of times Screaming Frog must retry a page when it returns a 5xx server error

PREFERENCES

In this part, you can define precisely what Screaming Frog should report as an error.

For example, you can define the maximum image weight from which we can consider it a problem or the maximum size of a meta description or a title.

Here it is up to you to define your SEO rules according to your experience and your desires.

2 / Robots.txt

Congratulations on making it this far. The first part was not easy but will be vital for a good configuration of your crawler. This part will be much simpler than the previous one.

By selecting Robots.txt from the menu, you can configure how Screaming Frog should interact with your robots.txt file.

SETTINGS:

You should normally see this when you get to the Robots settings section

The setup here is relatively straightforward. In the drop-down list you can tell Screaming Frog whether or not to respect the indications of the robots.txt file.

For example, Ignore robots.txt could be useful if the site you want to crawl does not allow Screaming Frog to crawl it for some reason. When you select Ignore robots.txt, Screaming Frog will not interpret the file, and you should be able to crawl the site in question.

Respect robots.txt is the most common case. The two checkboxes below then let you choose whether the reports should show the URLs blocked by robots.txt, both internal and external.

CUSTOM:

In this control panel, you can simulate your robots.txt yourself. This can be particularly useful if you want to test changes and see the impacts during a crawl.

Note that these tests will not impact your robots.txt file, which is online. If you want to modify it you will have to do it yourself after having done your tests in screaming Frog for example.

Here is an example of a test:

We added a line to prevent crawling of any URL containing /blog/

If you look at the bottom, you will see that the URL keyweo.com/fr/blog was blocked during the test we carried out.
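The same kind of "what if" test can be sketched outside the tool with Python's standard `urllib.robotparser`. The rules and the example.com URLs below are hypothetical. Note one important assumption: the standard library parser only does simple prefix matching on the path and does not support wildcards, so it will not necessarily behave like Screaming Frog's own robots.txt tester on more complex rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical custom robots.txt, tested locally without touching the live file.
CUSTOM_RULES = """\
User-agent: *
Disallow: /blog/
"""


def is_allowed(rules_text: str, url: str, user_agent: str = "*") -> bool:
    """Check whether user_agent may fetch url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(CUSTOM_RULES, "https://example.com/blog/my-post"))  # False
print(is_allowed(CUSTOM_RULES, "https://example.com/about"))         # True
```

As in Screaming Frog, nothing here modifies the robots.txt file that is online. It only simulates the effect of the rules.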

3 / URL Rewriting

This feature is very useful if your URLs contain parameters, for example on an e-commerce site with filtered listings. In this part, you can ask Screaming Frog to rewrite URLs on the fly.

REMOVE PARAMETERS

You can tell the crawler in this part to remove certain parameters to ignore them. This could be particularly useful for sites with UTMs in their URLs.

Example:

If you want to remove the UTM parameters from your URLs, you can use this configuration:

Then, to check that it works, go to the Test tab and enter a URL that contains these parameters to see the rewritten result.
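For readers who want to see what this rewriting amounts to, here is a small Python sketch that strips a set of parameters from a URL. The function name `remove_params`, the parameter list and the example URL are assumptions made for illustration. Screaming Frog performs the equivalent step internally during the crawl.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list of parameters to drop, similar to the Remove Parameters tab.
UTM_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def remove_params(url: str, names: set) -> str:
    """Return url without the query-string parameters listed in names."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in names
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(remove_params(
    "https://example.com/page?utm_source=newsletter&color=red&utm_medium=email",
    UTM_PARAMS,
))  # https://example.com/page?color=red
```

With the tracking parameters removed, several variants of the same page collapse into one URL, which keeps the crawl results free of artificial duplicates.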

REGEX REPLACE

In this tab, you will be able to replace parameters on the fly by others. You can find many examples in the screaming frog documentation here

4 / Include and Exclude

At Keyweo, we use these features a lot. They are especially useful for sites with a lot of URLs when you only want to crawl certain parts of the site. Based on the rules you enter in these windows, Screaming Frog will only show certain pages in its list of crawl results.

If you manage or analyze large sites, this functionality will be very important to master to save you many hours of crawling.

The interface of include and exclude looks like this:

You can list the URLs or URL patterns to include or exclude in the white frame by putting one URL per line.

For example, if I only want to crawl the /fr/ URLs on Keyweo, here is what I can put in my include window:

As with URL rewriting, you can check that your include works by testing a URL that should not be included in the crawl:

Our example above shows that the include works, since URLs containing /es/ are not taken into account.

In the event that you wish to exclude certain URLs, the procedure will be similar.
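The include and exclude logic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `should_crawl` is hypothetical, the patterns are treated as regular expressions, and partial matching with `re.search` is assumed. Check the Screaming Frog documentation for the exact matching rules of the tool.

```python
import re


def should_crawl(url: str, include=(), exclude=()) -> bool:
    """Decide whether a URL passes hypothetical include/exclude pattern lists."""
    # An exclude match always wins.
    if any(re.search(pattern, url) for pattern in exclude):
        return False
    # If include patterns are given, at least one must match.
    if include:
        return any(re.search(pattern, url) for pattern in include)
    return True


include_patterns = [r"/fr/"]

print(should_crawl("https://www.keyweo.com/fr/blog", include=include_patterns))  # True
print(should_crawl("https://www.keyweo.com/es/blog", include=include_patterns))  # False
```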

5 / Speed

In this part, you can choose how fast Screaming Frog crawls the site. This can again be useful when you want to analyze large sites, since crawling them with the default configuration could take a long time. By increasing Max Threads, Screaming Frog will be able to browse the site much faster.

This Configuration should be used with caution because it can increase the number of HTTP requests made to a site which could slow it down. It can also crash the server in extreme cases, or the server could block you.

In general, we recommend that you leave this setting between 2 and 5 of Max Threads.

Also, note that you can define a number of URLs to crawl per second.

Do not hesitate to contact the technical teams who manage the site to better manage the Crawl of the site and avoid overloading.

6 / User agent configuration

In this part, you can define which user agent you want to crawl the website with. By default it will be Screaming Frog, but you can, for example, crawl the site as Googlebot (Desktop) or Bingbot.

This could be useful if you cannot crawl the site because it blocks crawls made with the Screaming Frog user agent.

7 / Custom Search and Extraction

CUSTOM SEARCH

If you want to do specific analyzes by highlighting pages containing certain elements in their HTML code, you are going to love Custom Search.

Examples of use:

You want to highlight all the pages containing your Google Analytics UA code. You could run a search in Screaming Frog by putting your UA code in the search window. This could, for example, help you spot pages that do not have the analytics code installed.

You run a blog about childbirth and want to see on which pages the phrase "baby box" appears, in order to optimize the pages that contain it or, conversely, those that do not, and rank better for this query. You just have to enter "baby box" in the Contains field of the Custom Search window.

You will see this in your Screaming Frog custom search:

If you look at the column "Contains Baby Box", you will see that Screaming Frog has listed the pages containing "baby box". We will let you imagine how useful this can be for your later SEO optimizations.
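Conceptually, a "contains" custom search boils down to checking each page's HTML for a string. The following Python sketch shows the idea on a tiny, hypothetical set of pages. The function name `pages_containing` and the sample data are assumptions, and the case-insensitive comparison is a choice made for this example.

```python
def pages_containing(pages: dict, needle: str) -> dict:
    """Map each URL to True/False depending on whether its HTML contains needle."""
    needle = needle.lower()
    return {url: needle in html.lower() for url, html in pages.items()}


# Hypothetical crawl results: URL -> raw HTML source.
pages = {
    "https://example.com/gift-ideas": "<h1>Gift ideas</h1><p>Our baby box selection</p>",
    "https://example.com/contact": "<h1>Contact us</h1>",
}

report = pages_containing(pages, "baby box")
missing = [url for url, found in report.items() if not found]
print(missing)  # ['https://example.com/contact']
```

The same approach works for spotting pages that lack a tracking snippet: search for the snippet's identifier and list the URLs where it is not found.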

EXTRACTION

Through its Extraction feature, Screaming Frog also lets you scrape certain data to use in your further analyses. At the agency, we increasingly use this tool for certain specific analyses.

To scrape this data, you just have to configure your extractor by telling it what to retrieve via an XPath, a CSS path or a regex.

You will find all the information for this Configuration via this article: https://www.screamingfrog.co.uk/web-scraping/

Examples of uses:

You want to retrieve the publication date of your articles and then decide which ones to clean up or re-optimize. This feature will let you retrieve this information in your list of Screaming Frog results and then, for example, export it to Excel. Great, no?

You want to export the list of your competitors' products that appear on their listing pages inside h2 tags. Once again, you can simply configure your extractor to retrieve this information and then work with that data in Excel.

The possibilities of using this extraction feature are truly limitless. It's up to you to be creative and to know how to master it perfectly.
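To give a feel for what an XPath extractor returns, here is a minimal Python sketch that pulls the text of every h2 from a small listing page. The markup and the function name `extract_text` are hypothetical. It relies on the limited XPath support of the standard library's `xml.etree.ElementTree` and assumes well-formed markup, which real-world HTML often is not, so treat it as an illustration of the principle rather than a scraper.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed listing page.
LISTING = """<html><body>
<h1>Cards</h1>
<div class="product"><h2>Birthday card</h2></div>
<div class="product"><h2>Wedding card</h2></div>
</body></html>"""


def extract_text(markup: str, xpath: str) -> list:
    """Return the stripped text of every element matching a simple XPath."""
    root = ET.fromstring(markup)
    return [(element.text or "").strip() for element in root.findall(xpath)]


print(extract_text(LISTING, ".//h2"))  # ['Birthday card', 'Wedding card']
```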

8 / API Access

In this window, you can pull in data from other tools by configuring your API access. For example, you can easily import your Google Analytics, Google Search Console, Majestic or Ahrefs data into your tables alongside the information for each URL. Needless to say, this will multiply the power of your analyses and audits.

One small downside: keep an eye on your API credits for each of these tools. They can run out very quickly.

9 / Authentication

Some sites require a login and password to be able to access all of the content. Screaming Frog allows you to manage the different access cases via password and username.

BASIC AUTHENTICATION CASE

In most cases, you will not have to configure anything: by default, if Screaming Frog encounters an authentication window, it will ask you for a username and password so that it can perform the crawl.

Here is the window you should have:

All you have to do is simply enter the username and password, and screaming Frog can perform its Crawl.

In some cases there may be a blockage via the robots.txt. You will then have to refer to point 2 / that we have seen to ignore the robots.txt file

CASE OF AN INTERNAL SITE FORM

In the case of a login form on the site itself, you can simply enter your credentials in the Authentication > Form-based part to allow the crawler to access the pages you want to analyze.

10 / System:

STORAGE

This functionality is also important to master in case you need to crawl sites with more than 500,000 URLs.

You have in this window two options: Memory storage and database Storage

By default, Screaming Frog uses memory storage, which means it uses your computer's RAM to crawl the site. This works well for sites that are not too big. However, you will quickly reach its limits on sites with more than 500,000 URLs: your machine may slow down considerably, and the crawl will take a long time. Trust our experience.

For larger sites, we recommend that you switch to database storage, especially if you have an SSD. The other advantage of database storage is that your crawls are saved and can easily be reopened from the Screaming Frog interface. Even if you stop a crawl, you can resume it, which in some cases can save you long hours of crawling.

MEMORY

This is where you define how much memory Screaming Frog can use. The more memory you allocate, the more URLs Screaming Frog will be able to crawl, especially if it is configured to use memory storage.

11 / The crawl mode:

SPIDER

This mode is the default configuration of Screaming Frog. Once you have entered your URL, Screaming Frog will follow the links of your site to browse it entirely.

LIST MODE

This mode is really very practical because it allows you to tell screaming Frog to browse a specific list of URLs.

For example, if you want to check the status of a list of URLs you have, all you have to do is upload a file or copy-paste your list of URLs manually.

SERP MODE

This mode does not involve a crawl. It lets you upload a file containing, for example, your titles and meta descriptions to preview how they would look in search results. You can use it, for example, to check the length of your titles and meta descriptions after editing them in Excel.

Analyze your data from the Crawl

The screaming frog interface:

Before going headlong into the analysis of the data presented to you by screaming Frog, it is important to understand how the interface is structured.

Normally you should have something like this:

Each part has its own specific use, which will help you not to get lost in all the available data:

Part A (in green): This part lists the crawled resources, with SEO information for each row spread across the columns. In general, this is where you will find the list of your pages and the information specific to each page, such as the status code, the indexability of the page, the number of words… This view changes depending on what you select in part C.

Part B (in blue): This part gives you a more detailed view of each resource when you click on it. For a page, for example, you will see its links, the images it contains, and a lot of other information that can be very useful.

Part C (in red): This part is important for your analysis because it shows, point by point, what can be optimized, and Screaming Frog also gives you some hints to make your analysis easier. Example: missing meta description, multiple H1s…

To find your way around the analysis of your data, we recommend that you go via part C

The overview (Part C):

1 / The Summary:

In this part, you get a global view of the site, including the number of URLs on the site (images, HTML ...), the number of external and internal URLs blocked by robots.txt, and the number of URLs that the crawler was able to find (internal and external)

This will give you valuable information on the site at a glance.

2 / SEO Elements: Internal

You will find in this part all the internal URLs to your site.

Html

It is generally very interesting to look at the number of HTML URLs. You could, for example, compare this number with the number of pages indexed on Google using the site: command. This could give you very valuable information, for example if a lot of pages are not indexed on Google.

By clicking on HTML, you will see that the left part contains a lot of important information to analyze, such as:

=> Status Code of the page

=> Indexability

=> Information on titles (Content, size)

=> Information on meta description (Content, size)

=> Meta Keywords: no longer very useful to look at, unless the site in question still uses them. You could, for example, clean up these tags

=> H1 (The content, the size, the presence of a second h1 and its size)

=> H2 (The content, the size, the presence of a second h2 and its size)

=> Meta Robots

=> X Robots Tag

=> Meta refresh

=> The presence of a canonical

=> The presence of a rel next or rel prev tag

=> The size of the page

=> The text ratio: proportion between text and code

=> Crawl Depth: the depth of the page within the site. The home page is at level 0 if you start the crawl from it, a page one click away from the home page is at depth 1, and so on. It is generally worth reducing this depth on your site

=> Inlinks / unique inlinks: number of internal links pointing to the page in question

=> Outlinks / Unique outlinks: number of links leaving this page

=> Response time: page response time

=> Redirect URL: page to which the page is redirected if there is a redirection

You can also find all these elements by browsing the various elements in the right column.

If you connect to the APIs of the search console, google analytics, ahrefs… you will also have other columns available that would be very interesting to analyze.

JAVASCRIPT, IMAGES, CSS, PDF ...

By clicking on each part, you will be able to have interesting information on each type of resource on your site.

3 / SEO Elements: External

The external part corresponds to all URLs leading outside your site. For example, this is where you can find all the external links you make.

For example, it could be interesting to analyze the outgoing URLs of your site and look at their status. If you have 404s, it might be a good idea to correct your link or remove it to avoid directing to broken pages.

4 / Protocol:

In this part, you will be able to identify the HTTP and HTTPS URLs.

If you have a lot of HTTP URLs or duplicate pages in HTTP and HTTPS, it would be useful to manage the redirection of your HTTP pages to their HTTPS version

Another point: if you have HTTP URLs that point to other sites, you could check whether an HTTPS version of these sites exists and replace the links.

4 / Response Code:

The Response Codes section groups your pages by code type.

=> No response: This generally corresponds to the pages that screaming Frog could not crawl

=> Success: You should have here all your pages in status 200. That is to say that they are accessible

=> Redirection: You should have in this part all the pages with a 3xx status (301, 302, 307…). It could be interesting to see how to reduce this number, if possible, so that Google is sent directly to pages with status 200

=> Client Error: You will have here all the pages in error, for example with a 404 status. It will be important to look at the cause and set up a redirect.

=> Server Error : All the pages reporting a server error
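The grouping above follows the standard HTTP status classes, plus the code 0 that the tool may report when no response is received. As a minimal sketch, the mapping can be written like this in Python. The function name `response_group` and the sample crawl data are hypothetical.

```python
from collections import Counter


def response_group(status: int) -> str:
    """Map a status code to the group names used in this section."""
    if status == 0:
        return "No Response"
    if 200 <= status < 300:
        return "Success"
    if 300 <= status < 400:
        return "Redirection"
    if 400 <= status < 500:
        return "Client Error"
    if 500 <= status < 600:
        return "Server Error"
    return "Other"


# Hypothetical crawl export: URL -> status code.
crawl = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/missing": 404,
    "https://example.com/slow": 0,
}

summary = Counter(response_group(code) for code in crawl.values())
print(summary["Redirection"])  # 1
```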

5 / URL:

In this section, find the list of your URLs and the potential problems or associated improvements.

For example, you will be able to see your URLs with capital letters or your duplicated URLs

6 / Titles page:

Here you will have a lot of information about your titles and can optimize them to make them much more SEO friendly.

Here is the information you can find:

=> Missing: This will correspond to your pages which have no title. You will therefore have to make sure to add them if possible

=> Duplicate: Here you will have all the duplicated titles of your site. The ideal is to avoid having them. So it's up to you to see how to optimize them to avoid this duplication

=> Over 60 Characters: Beyond a certain number of characters, your titles will be cut in the results of Google. So make sure they are around 60 characters to avoid problems

=> Below 30 Characters: You will find here the titles that you could optimize in terms of size. Adding a few strategic words to get closer to 60 characters could allow you to optimize them

=> Over 545 Pixels / Below 200 Pixels: A bit like the previous elements, this gives you an indicator of the length of your titles but this time in pixels. The difference being that you could optimize your titles by using letters with more or fewer pixels to maximize space.

=> Same as H1: This means that your title is identical to your h1

=> Multiple: If you have pages containing several titles, this is where you can identify this problem and see the pages to correct
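Several of the checks in the list above are simple rules that can be sketched in Python. The example below uses the 60 and 30 character thresholds mentioned above. The function names `title_issues` and `duplicate_titles` are hypothetical, and pixel widths are left out because they depend on font rendering.

```python
from collections import Counter


def title_issues(title, h1=None, max_chars=60, min_chars=30):
    """Return the list of issues found for one page title."""
    if title is None or not title.strip():
        return ["Missing"]
    title = title.strip()
    issues = []
    if len(title) > max_chars:
        issues.append(f"Over {max_chars} Characters")
    elif len(title) < min_chars:
        issues.append(f"Below {min_chars} Characters")
    if h1 is not None and title == h1.strip():
        issues.append("Same as H1")
    return issues


def duplicate_titles(titles_by_url: dict) -> set:
    """Return the titles that are used on more than one URL."""
    counts = Counter(titles_by_url.values())
    return {title for title, count in counts.items() if count > 1}


print(title_issues("Short title"))  # ['Below 30 Characters']
print(duplicate_titles({"/a": "Home", "/b": "Home", "/c": "Contact"}))  # {'Home'}
```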

6 / Meta description:

On the same model as the titles you will have in this section many indications to optimize your meta description according to their size, their presence or the fact that they are duplicated

7 / Meta Keywords:

It is not necessarily useful to take these into account, unless the pages seem to have a lot of them and you want to clean them up.

It is up to you.

8 / H1 / H2:

In these parts, you can see if there are any optimizations to be carried out on your Hn tags.

For example, if you have several h1s on the same page, it could be worth keeping only one if that makes sense. Or, if you have duplicate h1s across your pages, you could rework them.

9 / Images:

By analyzing this part, you can generally find optimizations to carry out on your images, for example reworking images heavier than 100 KB or adding alt text to images that have none.

10 / Canonical:

A canonical is used to tell Google which page is the original version. For example, suppose you have a page with parameters whose content duplicates your page without parameters. You could put a canonical on the page with parameters to tell Google that the page without parameters is the main one and should be treated as the original.

In this part of screaming Frog, you will have all the information concerning the management of the canonicals of your site.

11 / Pagination:

Here you will have all the information concerning the pagination on your site so that you can manage it as well as possible.

12 / Directives:

The Directives section shows the information contained in the meta robots tag and the X-Robots-Tag. You will be able to see the noindex pages, the nofollow pages ...

13 / Hreflang:

When you audit a site in several languages, it is very important to check whether the site has hreflang tags to help Google understand which language is used on each page, even if we can assume that Google is increasingly able to work this out by itself.

14 / AMP:

Your AMP pages will be found here and you will be able to identify associated SEO issues

15 / Structured data:

To have information in this part you must first activate in your spider configuration the elements specific to structured data: JSON-LD, Microdata, RDFa

You will then have a lot of information to optimize your implementation of structured data on the site.

16 / Sitemaps:

In this part, you will be able to see the differences between what you have on your site and in your Sitemap.

For example, it is a good way to identify your orphan pages.

To have data in this part, you must also activate the possibility of crawling your Sitemap in the crawler configuration and to simplify the thing add the link to your Sitemap directly in the Configuration.
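The comparison between a sitemap and a crawl is essentially a set difference. Here is a minimal Python sketch, assuming a standard XML sitemap using the sitemaps.org namespace. The sample sitemap, the crawled URLs and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-landing-page</loc></url>
</urlset>"""


def sitemap_urls(xml_text: str) -> set:
    """Return the set of URLs listed in <loc> elements of a sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", NS) if loc.text}


def compare_with_crawl(in_sitemap: set, crawled: set) -> dict:
    """List URLs present on only one side of the comparison."""
    return {
        # Candidates for orphan pages: listed in the sitemap, never reached by links.
        "in_sitemap_not_crawled": sorted(in_sitemap - crawled),
        "crawled_not_in_sitemap": sorted(crawled - in_sitemap),
    }


crawled = {"https://example.com/", "https://example.com/blog"}
diff = compare_with_crawl(sitemap_urls(SITEMAP), crawled)
print(diff["in_sitemap_not_crawled"])  # ['https://example.com/old-landing-page']
```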

16 / Pagespeed:

By using the page speed insight API, you can very simply identify the speed problems of your pages and remedy them by analyzing the data present in this tab

17 / Custom Search and Extraction:

If you want to scrape elements or do specific research in the code of a page, this is where you can find the data

18 / Analytics and Search Console:

By also connecting screaming Frog to the APIs of Analytics and Search Console, you can find a lot of very useful information in these tabs.

For example, you will also find here orphan pages present in your tools but not in the Crawl or pages with a bounce rate greater than 70%

19 / Link Metrics:

Connect Screaming Frog to the Ahrefs and Majestic APIs to bring link metrics for your pages into this part (for example: Trust Flow, Citation Flow…)

Additional features of Screaming Frog

Analyze the depth of a site:

If you want to see the depth of your site it can be very interesting to look in the Site structure tab in the right part next to Overview:

In general, it is preferable to have a site where the majority of pages are accessible in 3 to 4 clicks. In our example above, we should optimize the depth because there are pages at depth 10+.
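Click depth can be understood as the shortest number of clicks from the start page, which a breadth-first search computes naturally. The sketch below works on a small, hypothetical internal link graph. The function name `crawl_depths` and the sample links are assumptions made for illustration, not the tool's actual implementation.

```python
from collections import deque


def crawl_depths(links: dict, start: str) -> dict:
    """Return the minimum click depth of every page reachable from start."""
    depths = {start: 0}  # the start page is at depth 0
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/category", "/about"],
    "/category": ["/category/page-2", "/"],
    "/category/page-2": ["/product-42"],
}

depths = crawl_depths(links, "/")
print(depths["/product-42"])                  # 3
print([p for p, d in depths.items() if d > 2])  # ['/product-42']
```

Pages that come out with a high depth are the ones to bring closer to the home page, for example by linking to them from category pages.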

Spatial vision of your site:

Once your crawl is finished, it is sometimes interesting to go to Visualizations > Force-Directed Crawl Diagram to get an overview of your site in the form of a node graph

All the little red dots, for example can be analyzed to understand if there is a problem or not.

This vision can also lead you to think about the organization of your site and its internal network.

While spending a little time with this visualization, you can find many very interesting optimization avenues.

Conclusion Screaming Frog

Screaming Frog is therefore an essential tool for any good SEO. If you master it in depth, it will let you do many things, ranging from technical SEO analysis to site scraping.